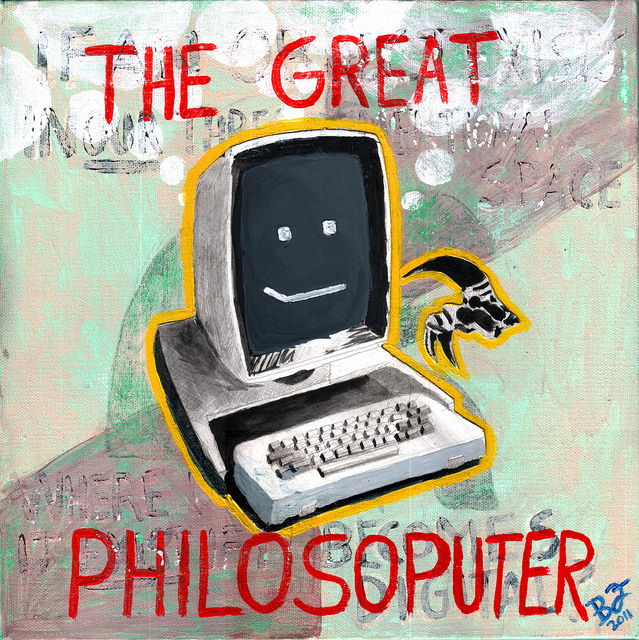

Artwork by Braydon Fuller via Post-Software.

Aeon published a long reflection on the possibilities of emergent consciousness, written by George Musser. In the essay he noted:

“Even systems that are not designed to be adaptive do things their designers never meant. […] A basic result in computer science is that self-referential systems are inherently unpredictable: a small change can snowball into a vastly different outcome as the system loops back on itself.”

It’s the butterfly effect, in other words. A small change within a complex system can set off a cascade of further small changes that quickly add up to large ones. Thus computer programs can surprise their designers. Often they’re just buggy, but at other times they develop capabilities that are difficult not to anthropomorphize. Either computers are messing up (cute, maybe frustrating) or they’re stumping us with semblances of creativity. It’s a human impulse to ascribe intent and meaning to any output composed of symbols (for example, text or numbers).

I’m not a mathematician, an engineer, or a scientist. Like most of us, I don’t have the training to understand even rudimentary AI. (I don’t have the aptitude either, but that’s a separate discussion.) AI is starting to scare me more and more. I’m still skeptical of existential risk (x-risk), so that’s not my worry. To be honest, I’m anxious about becoming obsolete. It’ll be a long time before the kind of work that I do can be fully automated, but humans who understand computers better than I do may be able to glue different smart programs together and get my job done with less human labor.

The economy is a complex system. Small gains in efficiency can ripple out to transform entire industries.